Learn about the distributed techniques of Colossal-AI to maximize the runtime performance of your large neural networks.

Features

Colossal-AI

Colossal-AI provides a collection of parallel components. The goal is to let you write distributed deep learning models the same way you would write a model on your laptop. It also provides user-friendly tools to kickstart distributed training and inference in a few lines.

Parallelism strategies

Data Parallelism

Pipeline Parallelism

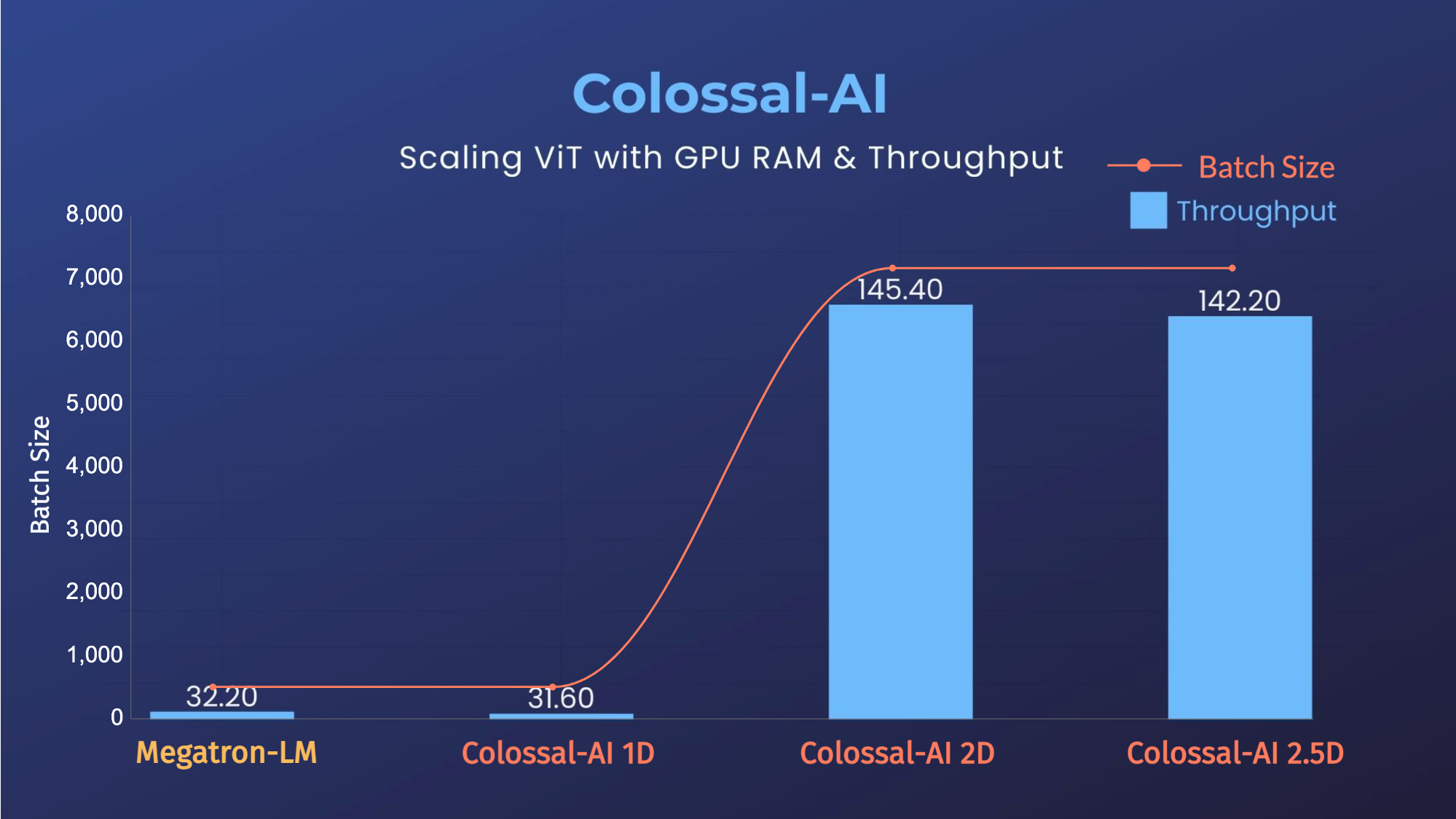

1D, 2D, 2.5D, 3D Tensor Parallelism

Sequence Parallelism

Zero Redundancy Optimizer (ZeRO)

Auto-Parallelism

Heterogeneous Memory Management

PatrickStar
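
To make one of the strategies above concrete, here is a minimal sketch of the idea behind 1D tensor parallelism, simulated on a single machine with NumPy. It does not use Colossal-AI's API: the function `column_parallel_linear` and the shapes are hypothetical, chosen only for illustration. The weight matrix of a linear layer is split column-wise, each shard computes its slice of the output, and the slices are concatenated.

```python
import numpy as np


def column_parallel_linear(x, weight, num_shards):
    """Compute x @ weight with the weight split column-wise.

    Each shard stands in for one device in a 1D tensor-parallel group.
    (Hypothetical helper for illustration; not part of Colossal-AI.)
    """
    # Split the weight into equal column blocks, one per simulated device.
    shards = np.split(weight, num_shards, axis=1)
    # Every device multiplies the full input by its own column block.
    partial_outputs = [x @ shard for shard in shards]
    # Gathering the blocks along the feature axis rebuilds the full output.
    return np.concatenate(partial_outputs, axis=1)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))        # batch of 4, 8 input features
weight = rng.standard_normal((8, 6))   # 8 input features, 6 output features

sharded = column_parallel_linear(x, weight, num_shards=2)
reference = x @ weight
print(np.allclose(sharded, reference))
```

In a real deployment each shard lives on a different GPU and the concatenation becomes a collective communication step. The 2D, 2.5D, and 3D variants partition the matrices along more dimensions to reduce per-device memory and communication.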

Friendly Usage

Parallelism based on the configuration file

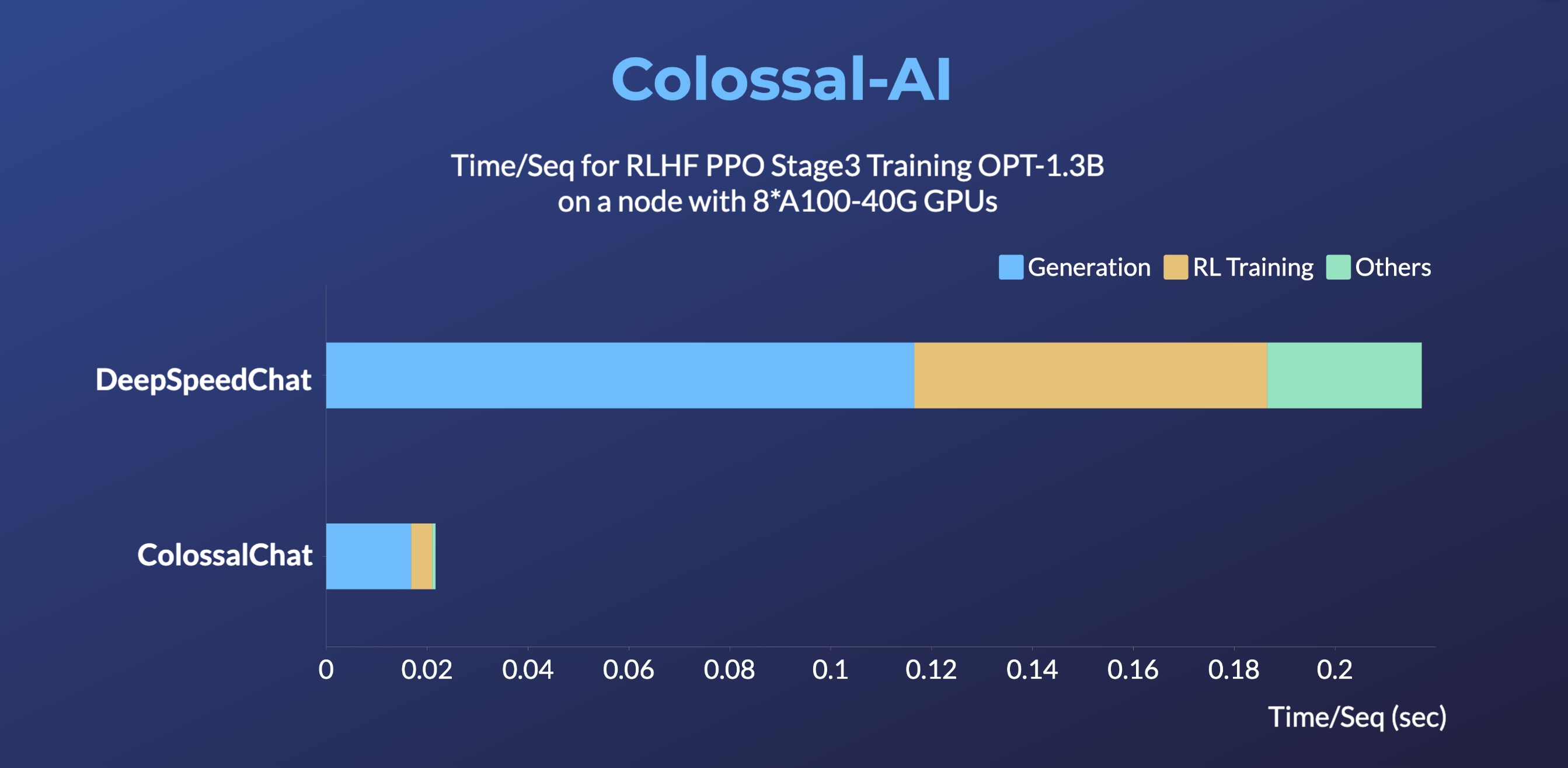
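
As a rough illustration of configuration-driven parallelism, the sketch below declares a parallel layout as a plain Python dictionary and derives the data-parallel size from it. The field names (`pipeline`, `tensor`, `size`, `mode`) are assumptions modeled on a dictionary-style config and may not match the current Colossal-AI release. The helper `data_parallel_size` is hypothetical and written only for this example.

```python
# Assumed config layout: 2 pipeline stages, 4-way tensor parallelism in 2D mode.
# The key names are illustrative assumptions, not a verified Colossal-AI schema.
parallel = dict(
    pipeline=2,
    tensor=dict(size=4, mode="2d"),
)


def data_parallel_size(parallel_config, world_size):
    """Return how many data-parallel replicas fit in world_size devices.

    Hypothetical helper: the devices left over after pipeline and tensor
    parallelism are assigned determine the data-parallel degree.
    """
    pipeline_size = parallel_config.get("pipeline", 1)
    tensor_size = parallel_config.get("tensor", {}).get("size", 1)
    model_parallel_size = pipeline_size * tensor_size
    if world_size % model_parallel_size != 0:
        raise ValueError(
            f"world_size {world_size} is not divisible by "
            f"pipeline x tensor = {model_parallel_size}"
        )
    return world_size // model_parallel_size


print(data_parallel_size(parallel, world_size=16))  # prints 2
```

Keeping the layout in a config file means the model code stays unchanged when the number of devices or the parallel strategy changes.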

ColossalChat:

An open-source solution for cloning ChatGPT with a complete RLHF pipeline.

Parallel Training Demo

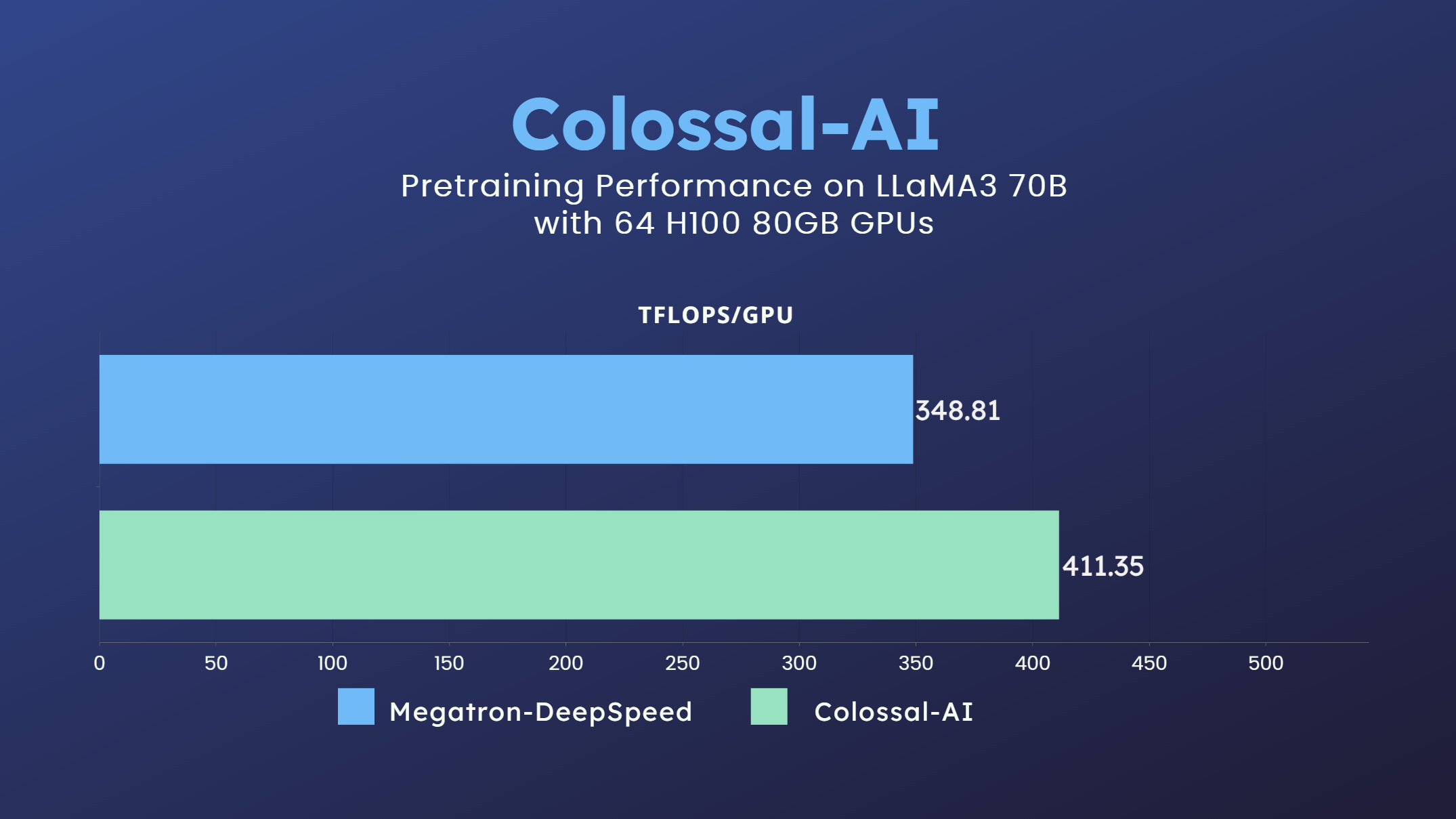

Training of the 70-billion-parameter LLaMA3 model accelerated by 18%